The Spark Connector provides support for interacting with Spark for batch processing workloads, allowing the use of all standard APIs and functions in Spark to read, write, and delete data. For stream processing workloads, use the provided Flink Connector instead.

Spark Scala how-to

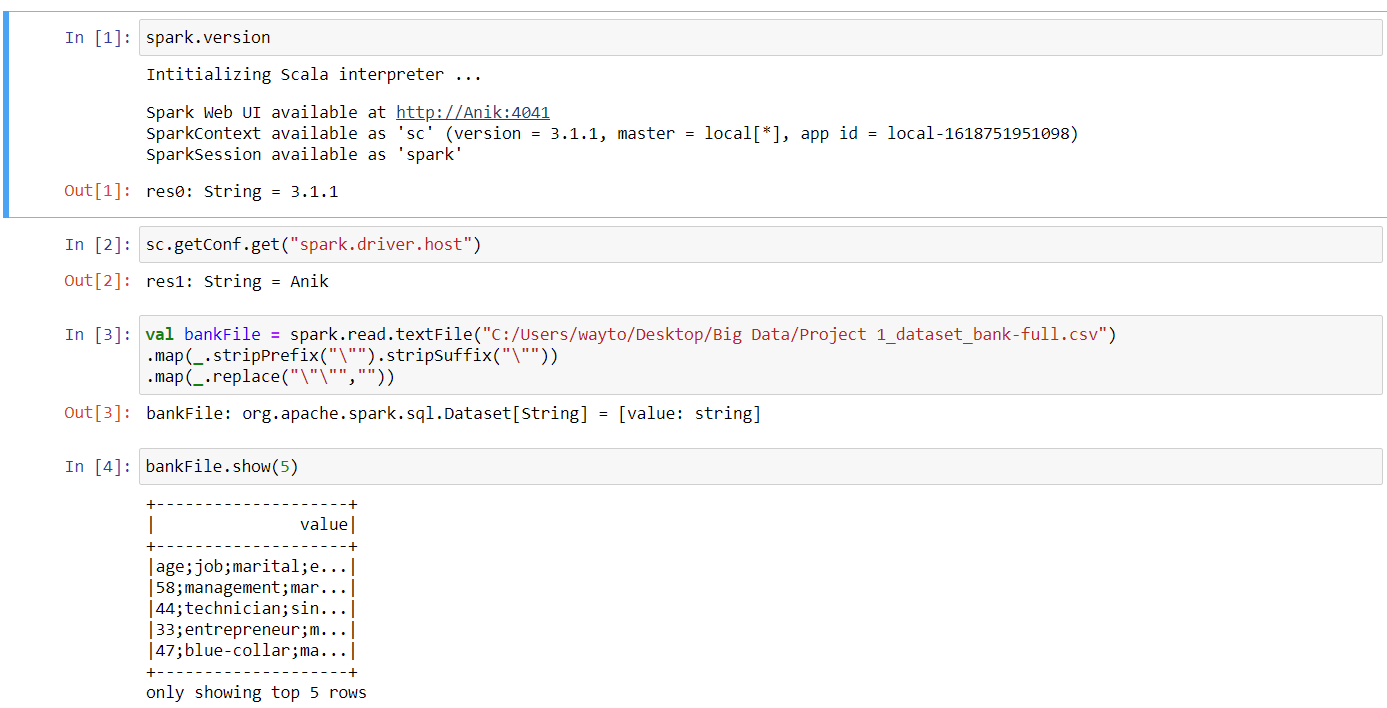

Use Spark Connector to read and write data

The example in this tutorial demonstrates how to use the Spark Connector provided by the Data Client Library.

Prerequisites: Organize your work in projects

Objectives: Understand how to use the Spark Connector to read and write data from different layers and data formats in a catalog.

`unionByName` differs from both `union` and `unionAll` in that it resolves columns by name rather than by position. It also has an optional parameter, `allowMissingColumns`, which, when set to True, allows combining dataframes with different column names as long as the data types of the shared columns remain the same. The parameter also allows unioning disparate dataframes with an unequal number of columns. In this case, the missing values are filled with nulls and the missing columns are added at the end of the schema of the union result.

Note that in the example below, the Continent and Measurement_System fields, which are missing from the first dataframe, have been added to the union because `allowMissingColumns` is set to True. Setting the parameter to False results in an error.

    pDf1.unionByName(pDf4, allowMissingColumns=True).show()

Rows from the second dataframe, such as USA, carry nulls for the columns they lack:

    |Country|Capital|Language|    Continent|Measurement_System|
    |    USA|   null|    null|North America|          Imperial|

In a real-world use case that requires combining multiple dynamic dataframes, chaining dataframes with several union calls can look untidy and impractical. In such situations, it is better to design a reusable function that can efficiently handle multiple unions of dataframes, including scenarios where one or more columns are missing or where column names differ.

An example of such a function is presented below, using the `reduce` function from Python's functools library for PySpark dataframes. For Spark dataframes in Scala, the `reduceLeft` function plays the same role.

    # PySpark - Union Multiple Dataframes Function
    from functools import reduce
    from typing import List

    from pyspark.sql import DataFrame

    def unionMultipleDf(DfList: List) -> DataFrame:
        """
        This function combines multiple dataframes rows into a single data frame
        Parameter: DfList - a list of all dataframes to be unioned
        """
        # create anonymous function with unionByName
        unionDfWithMissingColumns = lambda dfa, dfb: dfa.unionByName(dfb, allowMissingColumns=True)
        # use reduce to combine all the dataframes
        finalDf = reduce(unionDfWithMissingColumns, DfList)
        return finalDf

To use the function, pass it a list of dataframes, for example the four dataframes pDf1 to pDf4:

    # PySpark - Using unionMultipleDf function
    unionMultipleDf([pDf1, pDf2, pDf3, pDf4])
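To see why `reduce` suits a multi-dataframe union, it helps to watch how it folds a list pairwise. The sketch below is plain Python, not Spark code: short lists stand in for dataframes, and `combine` is a hypothetical stand-in for the `unionByName` lambda, introduced here only for illustration.

```python
from functools import reduce

# Plain lists stand in for dataframes. `combine` is a hypothetical
# stand-in for dfa.unionByName(dfb, allowMissingColumns=True).
calls = []

def combine(dfa, dfb):
    calls.append((list(dfa), list(dfb)))  # record each pairwise step
    return dfa + dfb

frames = [["France"], ["Brazil"], ["Mexico"], ["USA"]]

# reduce works left to right: ((f0 + f1) + f2) + f3
final = reduce(combine, frames)

print(final)       # ['France', 'Brazil', 'Mexico', 'USA']
print(len(calls))  # 3 pairwise combinations for 4 inputs
```

One caveat worth keeping in mind: `reduce` raises a `TypeError` when given an empty list and no initial value, so a reusable union function may want to check for an empty input first.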

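The difference between position-based and name-based resolution can be made concrete with a small model. The sketch below is plain Python and is not how Spark implements these operations; a "frame" is assumed to be a `(columns, rows)` pair, and `union_by_position` and `union_by_name` are hypothetical helper names chosen for this illustration.

```python
# A plain-Python model of the two resolution strategies (illustration only).
# A "frame" is a (columns, rows) pair; rows are tuples.

def union_by_position(a, b):
    cols_a, rows_a = a
    cols_b, rows_b = b
    if len(cols_a) != len(cols_b):
        raise ValueError("column counts differ")
    # The left frame's column names are kept; values are matched by index.
    return cols_a, rows_a + rows_b

def union_by_name(a, b, allow_missing_columns=False):
    cols_a, rows_a = a
    cols_b, rows_b = b
    if not allow_missing_columns and set(cols_a) != set(cols_b):
        raise ValueError("column names differ")
    # Columns missing on the left are appended at the end of the schema.
    out_cols = cols_a + [c for c in cols_b if c not in cols_a]

    def realign(cols, rows):
        idx = {c: i for i, c in enumerate(cols)}
        # Fill None where a row's frame lacks the column.
        return [tuple(r[idx[c]] if c in idx else None for c in out_cols)
                for r in rows]

    return out_cols, realign(cols_a, rows_a) + realign(cols_b, rows_b)

df1 = (["Country", "Capital", "Language"], [("France", "Paris", "French")])
df4 = (["Country", "Continent", "Measurement_System"],
       [("USA", "North America", "Imperial")])

by_pos = union_by_position(df1, df4)
by_name = union_by_name(df1, df4, allow_missing_columns=True)
```

In `by_pos`, "North America" silently lands under the Capital column, which is the pitfall of matching by position. In `by_name`, the USA row becomes `("USA", None, None, "North America", "Imperial")`, mirroring the null-filled row described for `allowMissingColumns=True`.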
One of the recurring tasks in data engineering and machine learning workloads is unioning, or combining, two or more tables, queries or dataframes into a single result containing all the rows from the tables in the union. Traditionally, union functionality was implemented as one of the built-in functions in ANSI SQL engines to manipulate query results. The function has since been adopted by major analytics tools and platforms. In the Spark API, the union operator is provided in three forms: `union`, `unionAll` and `unionByName`. In this post, we will take a look at how these union functions can be used to transform data using both Python and Scala. We will also cover a specific use case that involves combining multiple dataframes into one.

    val sDf1 = spark.createDataFrame(Seq(("France", "Paris", "French"), ("China", "Beijing", "Mandarin"), ("Germany", "Berlin", "German"))).toDF("Country", "Capital", "Language")

    val sDf2 = spark.createDataFrame(Seq(("Brazil", "Brasilia", "Portuguese"), ("Egypt", "Cairo", "Arabic"), ("Guinea", "Conakry", "French"))).toDF("Country", "Capital", "Language")

    val sDf3 = spark.createDataFrame(Seq(("Mexico", "Mexico City", "Spanish"), ("Gambia", "Banjul", "English"), ("Angola", "Luanda", "Portuguese"))).toDF("Country", "Capital", "Language")

    val sDf4 = spark.createDataFrame(Seq(("USA", "North America", "Imperial"), ("UK", "Europe", "Metric"))).toDF("Country", "Continent", "Measurement_System")

`union` and `unionAll` work the same way and use the same syntax in both PySpark and Spark Scala; `unionAll` is an alias for `union`. They combine two or more dataframes and create a new one. `union` resolves columns by position, so it expects every dataframe in the combination to have the same number of columns, with compatible data types in matching positions. `union` in the Spark SQL API is equivalent to UNION ALL in ANSI SQL, so the union result may contain duplicate records. To deduplicate, add `distinct()` at the end of the chain.

    pDf1.union(pDf2).union(pDf3).union(pDf4).show(truncate=False)
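The UNION ALL behaviour, and what deduplication changes, can be illustrated with a small plain-Python model. This is a sketch rather than Spark code: lists of tuples stand in for dataframes, and the variable names `df_a`, `df_b`, `union_all` and `deduplicated` are assumptions made for the example.

```python
# Rows as tuples; lists stand in for dataframes (illustration only).
df_a = [("France", "Paris", "French"), ("Egypt", "Cairo", "Arabic")]
df_b = [("Egypt", "Cairo", "Arabic"), ("Mexico", "Mexico City", "Spanish")]

# Like union(): rows are appended and duplicates are kept.
union_all = df_a + df_b

# Like union().distinct(): each distinct row appears once.
# dict.fromkeys keeps first-seen order, which Spark does not guarantee.
deduplicated = list(dict.fromkeys(union_all))

print(len(union_all))     # 4
print(len(deduplicated))  # 3
```

In PySpark the corresponding chain would be `pDf1.union(pDf2).distinct()`. Deduplication compares whole rows, so two rows that differ in any column are both kept.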
